A vertical AI business has many of the same building blocks as any great technology company. While essential lessons and frameworks that have helped founders create industry-defining vertical software businesses still apply, they’re only part of the equation for those building the next vertical AI giant.

Vertical AI founders and teams are uncovering a bounty of largely untapped opportunities, and with these opportunities come novel considerations, challenges, and risks that warrant founders’ attention.

Vertical AI is in a very early chapter, but the good news for founders is that blueprints of success do exist. There’s a cohort of emergent vertical AI leaders whose founding predates the dawn of the AI era, such as EvenUp (founded in 2019), Subtle Medical (2017), Abridge (2018), and Fieldguide (2020) — companies led by prescient innovators who have identified areas where AI, with the right capabilities, could solve problems and drive ROI where past software companies couldn’t.

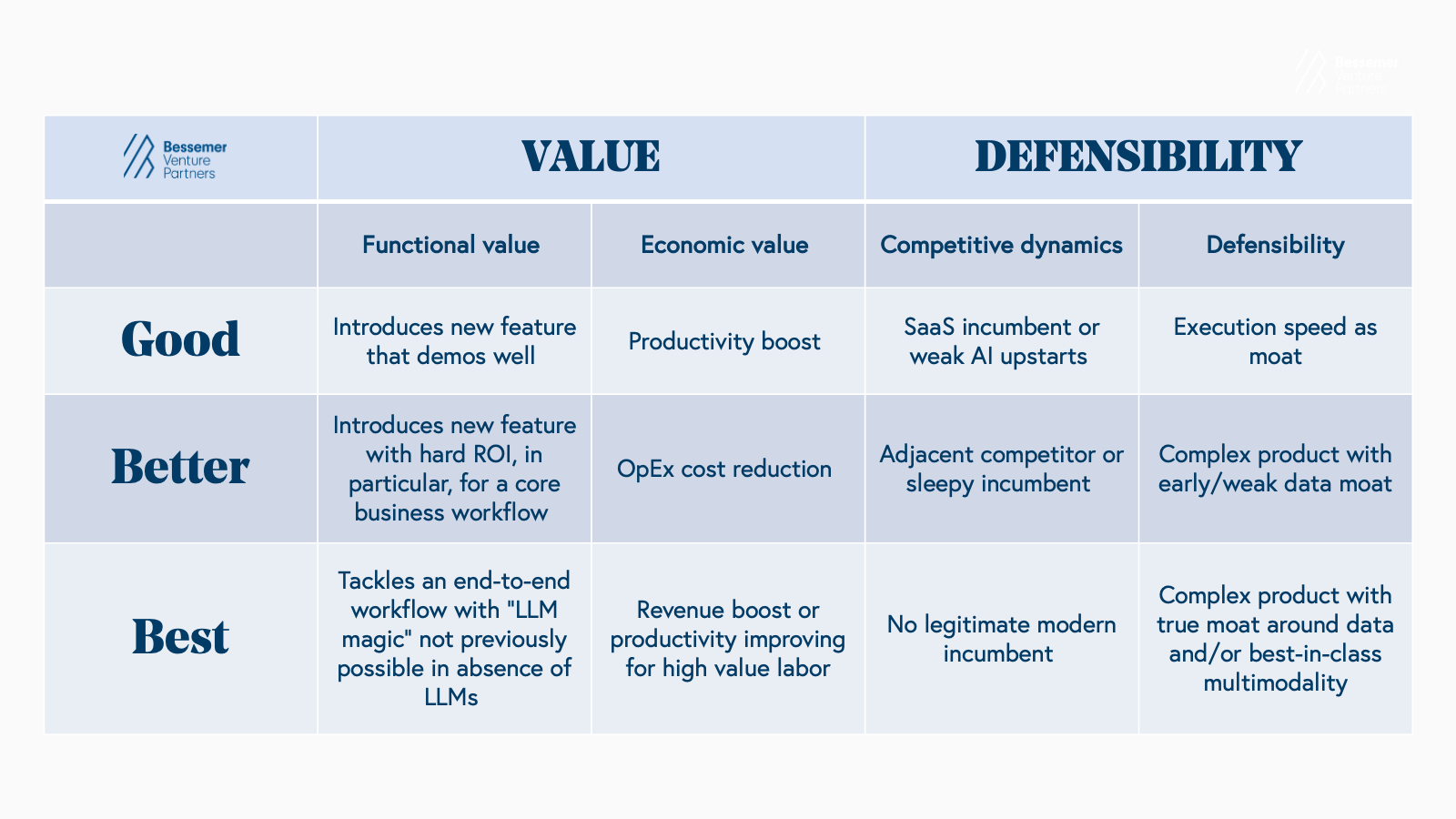

Through our partnership and analysis of some of the most valuable, innovative, and defensible vertical AI solutions to date, we’ve formulated initial guiding principles for building strong vertical AI businesses from the ground up.

In the fourth and final installment of our Vertical AI Roadmap, we share ten key principles focused on strengthening the functional value, economic value, competitive position, and defensibility of vertical AI products, services, and businesses. Plus, we offer founders advice on applying these high-level principles to take practical action starting at the earliest stages of company-building.

Key principles for building strong vertical AI businesses

- Customer-centric automation: Build solutions only where automation aligns with customer needs and context, not just possibility.

- Avoid commoditized features: Focus on differentiated, integrated workflows rather than features that competitors can easily replicate.

- Leverage AI for superhuman tasks: Identify and implement AI in areas where it can operate at scales or speeds unattainable by humans.

- Quantifiable ROI unlocks value: Demonstrate clear revenue gains or cost reductions to drive adoption and loyalty.

- Innovate on business models: Embrace new delivery methods and pricing enabled by AI automation to access broader market segments and expand margins.

- Target niche and underserved markets: Initial competitive advantage often lies in overlooked, high-ROI areas.

- Customize for nuanced requirements: Serve complex buyer needs (compliance, security, etc.) to erect defensible barriers.

- Technical moat comes from multimodality: Competitive edge increasingly depends on combining data types and workflow integrations, not proprietary models alone.

- Build modular and adaptable systems: Ensure tech infrastructure can flexibly incorporate the best models as AI evolves.

- Prioritize data quality over quantity: Early success depends on high-quality, relevant data, which compounds in value as the business scales.


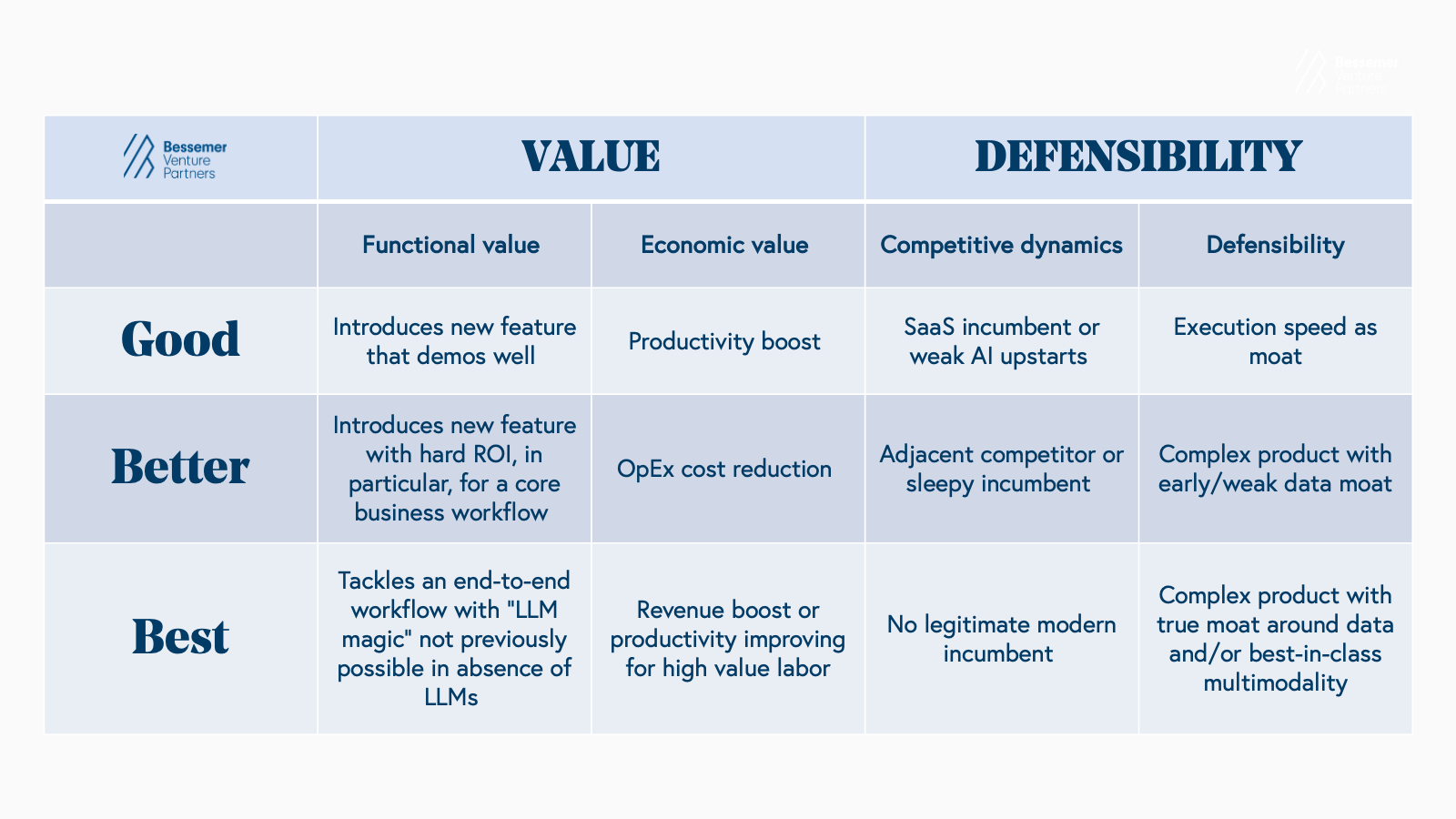

Our Vertical AI investment framework

Guiding principles for building vertical AI businesses

Functional value

1. Determine which workflows your target customers want to automate.

Core and supporting workflows across different sectors will have varying propensities for automation. However, a workflow’s propensity for automation — supporting or not — isn’t the only important consideration when deciding what to build. Customers will inevitably have different degrees of interest in automation or have strict, specific requirements for automation, including in cases where builders view a given workflow as a perfect use case for automation.

Sometimes, these preferences or requirements can be addressed in the design of your product. For example, a dental office might want to set the procurement of medical supplies on autopilot if the order is below a certain cost but still want a human to review larger purchases. An AI procurement solution can bake in that flexibility by completely automating certain orders while bringing a human into the loop for others. As another example, a law firm might be comfortable completely automating its client payment workflows but, for a core workflow like writing legal briefs, prefer a human-in-the-loop approach in which AI creates an initial draft and humans shape the final output, whether because of potential pushback from the end client or a desire to maintain control over the final product.
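To make the dental-office example concrete, here is a minimal sketch of how a cost threshold might decide between full automation and human review. The `Order` class, the `route_order` function, and the $500 default limit are all hypothetical names and values chosen for illustration; a real product would presumably let each customer configure its own rules.

```python
from dataclasses import dataclass


@dataclass
class Order:
    supplier: str      # hypothetical supplier name
    total_usd: float   # total order cost in US dollars


def route_order(order: Order, auto_approve_limit_usd: float = 500.0) -> str:
    """Decide how an order is handled.

    Orders strictly below the limit are placed automatically; everything
    else is queued for a human to review. The limit is an assumed,
    customer-configurable setting.
    """
    if order.total_usd < auto_approve_limit_usd:
        return "auto_approve"
    return "human_review"


if __name__ == "__main__":
    for order in (Order("GloveCo", 120.0), Order("ImagingCo", 4200.0)):
        print(order.supplier, route_order(order))
```

Keeping the threshold as a parameter rather than a constant reflects the point above: the degree of automation is a customer preference, not a property of the workflow.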

As always, thorough market and user research is key. For example, in healthcare, Abridge and others have seen a swift adoption of AI solutions that address administrative workflows, and that’s in large part because clinicians want to automate away administrative tasks such as scribing. And yet, while there’s also interest in current applications of multimodal AI for diagnostics, some solutions have seen less adoption because healthcare’s payment models have lagged behind its industry innovation.

The takeaway? Just because something can be automated doesn’t mean it should be or that the conditions are right, given the possibility of industry-specific bottlenecks and other speedbumps. Your customer’s guidance in where and how they want to use AI should always be your North Star.

2. Steer clear of easily commoditized applications.

Your vertical AI solution needs to differentiate itself in terms of what its core capabilities are — not just how well it executes those capabilities.

Certain solutions, such as data extraction and verification, will likely become table stakes, and building solutions with these kinds of easily replicable core value propositions will leave companies open to significant competitive pressure. For instance, we’ve seen increased accounts receivable and payable (AR/AP) automation solutions within financial services. While AI features for data matching and invoice reconciliation may offer some value, a solution that integrates these capabilities within a workflow tool that also creates tailored payment and membership plans for end customers would be more powerful and defensible — especially in the case of a vertical solution with sector-specific workflows.

To mitigate the risk of LLM commoditization, the best vertical AI applications will be tightly integrated with existing systems, regardless of whether the application is an end-to-end or single-point solution. Many B2B AI startups achieve this by partnering with established platforms, particularly large incumbents, to create value through seamless integrations.

For example, Sixfold, a generative AI leader for insurance underwriters, is designed to enhance underwriting capacity, accuracy, and transparency. Its solution is embedded as an API or plug-in within existing policy administration systems (PAS), eliminating the need for insurers to overhaul legacy systems or re-platform their workbench. This seamless integration allows underwriters to effortlessly introduce Sixfold’s capabilities directly into their daily workflows.

3. Look for opportunities for AI to do work impossible for humans to do.

AI has been touted for its ability to take over rote and mundane tasks and workflows from employees so they have more time for the specialized work that they were trained and hired to do. However, the vertical AI products that provide the greatest value are also supplying teams with capabilities that humans don’t have. One of the most common capabilities unique to AI is its ability to operate at scale.

For example, Rilla, an AI company in the home services vertical, provides coaching to sales representatives by transcribing and analyzing their in-person interactions with customers and then delivering tailored feedback and advice on improving performance. Without Rilla, a sales manager would have to physically accompany reps to onsite visits, and that manager's understanding of performance and best practices would always be limited by the number of time-consuming “ride-alongs” they could complete. Rilla, on the other hand, can audit high volumes of conversational data from reps across the company, meaning that the coaching it provides to sales reps is based on orders of magnitude more data than a human could ever review individually.

There’s a reason why certain industries like sales and marketing, services, and legal lend themselves to the magic of AI and LLMs: success in these categories requires both producing and deriving insights from large volumes of written text and, in some cases, audio recordings. This is time-consuming work on which AI solutions can take the first pass, or even take over completely.

Economic value

4. The most valuable solutions unlock revenue.

Demonstrating your AI solution’s hard ROI (either dollars saved or gained) for customers can expedite sales cycles considerably and increase customer retention. Solutions that improve profit margins by driving new revenue and/or reducing spend not only become an integral part of a customer’s workflow but also of the performance of that customer’s business.

One type of hard ROI is dollars saved via cost containment. For example, Abridge, which automates note-taking for doctor-patient interactions (among other things), has been able to show how much its platform is increasing doctor job satisfaction and, as a result, doctor retention. By driving retention, Abridge substantially mitigates the cost of recruiting and training physicians — costs which often amount to millions, if not tens of millions, annually.

On top of cost containment, Abridge contributes to new revenue by freeing up one to two hours daily per physician. These extra hours enable physicians to see more patients, directly boosting the hospital’s operational efficiency and generating revenue that wouldn’t be generated in the absence of the solution. Abridge’s detailed transcripts and summaries of each patient visit also prevent revenue leakage by ensuring comprehensive coding and billing.

EvenUp is another vertical AI company that directly increases its customers’ revenue by freeing up time, in this case, for legal professionals. Using a combination of the latest LLM technology and proprietary models (PiAi), EvenUp generates demand packages for personal injury law firms in a fraction of the time it would take a paralegal (who needs days to gather data from the client, collate hundreds of documents, extract data from medical and police reports, etc.). Because EvenUp’s legal operations team reviews each letter, law firms can maintain a high-quality standard and still drastically reduce (or eliminate) the time that their team spends on demand packages. This additional time allows firms to take on more cases, which increases revenue.

5. Novel business models can unlock new opportunities.

Vertical AI solutions are being delivered and priced in novel ways, which has opened up new verticals for automation, including verticals where there wasn’t sufficient TAM to build a traditional software business. This is especially true of the AI-enabled services model, where AI automates tasks to deliver potentially faster, cheaper, and more consistent services than legacy service providers.

Because of the lower cost structure inherent to automation, in 2024 we see, on average, ~56% gross margins and a 1.6x burn ratio across our vertical AI portfolio, which includes a significant number of services companies. Historically, services businesses have been hard to make profitable because of the costs of specialized workers, but with AI, specialized workers can play a more limited role, focused on quality control and reinforcement learning from human feedback (RLHF).

Some AI services products will be better delivered with human QA support, while others will be equally or more effective with an internal AI-powered product as the core service offering. The best way to deliver your AI solution will ultimately depend on the customer you’re serving and their comfort level with products and services that offer various degrees of automation, as well as other considerations.

Competitive dynamics

6. Build for overlooked categories and workflows.

Compared to broader horizontal categories like sales or marketing where scaled and well-resourced competitors (such as Salesforce or ADP) already exist, founders will typically find less incumbent pressure in vertical categories — especially under-the-radar verticals where few innovative companies are operating. While it’s ideal to claim the first-mover advantage in a greenfield environment, most vertical categories will already have at least one incumbent. However, when incumbents are stretched thin or slow to integrate AI, fast-moving startups can gain a competitive edge by building superior, high-ROI AI products and services that address workflows where automation is a valuable but non-obvious solution, either because it’s an unconventional use case or one where it’s difficult to execute with AI successfully.

7. Serve customers with nuanced needs.

Vertical AI companies can further differentiate by targeting customers within overlooked categories that have particularly complex requirements and nuanced needs that can’t be easily met by an AI solution. For example, an AI startup serving banks or government contractors would need to build sector-specific security and compliance tooling to sell to these buyers, which would add another vector of defensibility to the solution. To mitigate LLM commoditization risk, we will likely begin to see foundation model players, such as OpenAI and Anthropic, build models that support very specific and specialized use cases for customers in industries like these.

8. Models aren’t a reliable moat — but multimodality can be.

As model infrastructure costs continue to plummet, models will cease to be a moat, and early vertical AI founders will need to ask themselves: “Why is the product we’re building with AI going to be superior to what can be built with publicly available models and data?”

Constructing your technical architecture to address the specific problem you’re solving is a start. Examples include fine-tuning LLMs to better reflect a customer’s writing style or using retrieval-augmented generation (RAG) to better execute information retrieval. (We believe using RAG for industry-specific datasets is a foundational level of defensibility.)
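For readers less familiar with RAG, the sketch below illustrates only the retrieval and prompt-assembly steps, using a toy bag-of-words similarity in place of the learned embeddings a production system would typically use. All function names are hypothetical, the sample documents in the usage note are invented, and the final call to a language model is deliberately left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its word tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Assemble a prompt that grounds the model in the retrieved passages."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

Given a small set of industry documents (say, insurance policy clauses), `build_prompt("Does the policy cover flood damage?", docs)` would place the most relevant clauses ahead of the question before the prompt is sent to whichever model the product uses. The industry-specific corpus, not the retrieval code, is where the defensibility described above would come from.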

But we expect that additional layers of defensibility will be found in solutions that have the capabilities to address more complex, and particularly multimodal, workflows. For example, Jasper, the AI marketing platform, went multimodal when it acquired Clickdrop for image generation and then built additional enterprise marketing capabilities, including Brand Voice and Campaign Calendaring.

Defensibility

9. Focus on modularity and scalability in your model stack.

Unlike traditional SaaS businesses, where companies built on similar permutations of a standard technology stack, vertical AI companies need to build with bespoke, best-in-class infrastructure and LLMs in lockstep with the pace of innovation in AI — or else risk getting left behind. Teams can stay nimble by building internal capabilities to flexibly combine open-source, fine-tuned, and proprietary models so that your tech achieves the best outcome for your customer.

This approach allows you to move and execute quickly in a rapidly developing infrastructure landscape and take a plug-and-play approach to your technical architecture. If you can get the same quality result by taking an open-source LLM and fine-tuning it, it’s not worth building a model from scratch (especially if you would otherwise end up with technical debt when the market or tech changes). This approach will also allow you to put your resources towards what matters most: getting a superior product to your customer. It’s very unlikely that your customers will care about the details of the underlying model as long as the solution that you provide gets the job done better than any alternative available to them.

Again, a great example of a product built for flexibility is Jasper, the platform that sits in the nucleus of the marketing tech stack and acts as an “AI brain” to help users formulate, design, and execute initiatives across all marketing specialties. The Jasper team has architected a modular platform that uses multiple LLMs. It runs marketing inputs through several LLMs depending on the customer need, model performance, and cost. For example, if Claude 3.5 outperforms GPT-4 for a particular use case, Jasper’s product architecture is flexible enough to support interchangeable model infrastructure.
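As an illustration of what interchangeable model infrastructure can look like, here is a minimal routing sketch that picks a model per use case based on quality and cost. It is not Jasper's actual architecture; the `ModelOption` class, the `pick_model` function, the model labels, the evaluation scores, and the prices are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ModelOption:
    name: str                      # hypothetical label, not a real model ID
    quality_by_use_case: dict      # use case -> internal eval score in [0, 1]
    cost_per_1k_tokens_usd: float  # assumed price, for illustration only


def pick_model(options, use_case, min_quality=0.8):
    """Pick the cheapest model that clears the quality bar for a use case.

    If no model clears the bar, fall back to the highest-scoring one.
    """
    scored = [(m, m.quality_by_use_case.get(use_case, 0.0)) for m in options]
    good_enough = [m for m, quality in scored if quality >= min_quality]
    if good_enough:
        return min(good_enough, key=lambda m: m.cost_per_1k_tokens_usd)
    return max(scored, key=lambda pair: pair[1])[0]


if __name__ == "__main__":
    options = [
        ModelOption("model_a", {"blog_post": 0.90, "ad_copy": 0.70}, 0.010),
        ModelOption("model_b", {"blog_post": 0.75, "ad_copy": 0.88}, 0.004),
    ]
    for use_case in ("blog_post", "ad_copy"):
        print(use_case, "->", pick_model(options, use_case).name)
```

Because the routing decision lives in data (scores and prices) rather than in code paths tied to one provider, swapping in a newly released model means adding one more `ModelOption` entry and re-running evaluations.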

10. Don’t over-index on the quantity of data — it’s the quality that counts.

Much has been said about the importance of proprietary data sets as a key to defensibility. As an early-stage startup in a new category, builders likely can’t get the volume they’re looking for. However, high-quality data (of any amount) will have a compounding effect, benefitting the company more and more over time.

For example, in the early days of EvenUp, the team deliberately invested heavily in legal operations to have humans in the loop to review all demand letters; this was a case where data scale or mass didn’t matter as much as data quality and honing the model with feedback over time to improve the product.

What matters most at the early stage is building a solid, high-ROI initial product that’s meeting your core customer’s pain points and flying off the shelves, so getting initial quality data for models needs to happen without compromising fast-paced execution, especially if you’re trying to be first to market. With usage at scale, proprietary data will come, and then that data can be used to strengthen your product.

As another example, an AI-powered marketplace that automates the creation of requests for proposals (RFPs) for vendors could use historical and market data that comes as buyers and sellers get added to the platform, and that data could be used to produce RFPs that are more likely to win business. But when your marketplace is just getting started, your ability to surface good business opportunities for vendors and surface quality vendors for buyers will matter most.

The massive opportunity for Vertical AI takes shape

What continues to astonish us at Bessemer is the sheer scale of vertical AI businesses and their potential to surpass their vertical SaaS predecessors. This massive growth is driven, in part, by Vertical AI’s ability to access labor and services budgets rather than being confined to traditional IT software budgets.

Vertical AI solutions are reimagining systems of record as systems of work, offsetting future headcount growth, and amplifying productivity by equipping employees with entirely new capabilities. Unlike vertical SaaS, which typically captures only a fraction of a Fortune 500’s IT spend, Vertical AI taps into the labor line of a P&L, accessing exponentially larger budgets.

Emergent leaders in this category have already demonstrated how Vertical AI can transform underserved, service-heavy industries by automating essential workflows and delivering value where prior software solutions fell short. These companies are tangible case studies for vertical AI businesses yet to be started, and through them, aspiring founders can learn powerful lessons that they likely couldn’t find in a book doled out in business school.

Our entire Vertical AI roadmap stems from deep partnerships and hundreds of conversations with entrepreneurs, as well as extensive research and decades of experience investing in vertical software. However, we must not mistake the existence of today’s frameworks and strategies as a sign that the category is mature. Vertical AI is still in its infancy. Opportunities remain vast, and the landscape will only grow more dynamic as advancements in generative AI and foundation models open new frontiers.

Many of tomorrow’s Vertical AI titans are being built today — but countless others are still ideas waiting to be imagined and brought to life.

If you’re working on a Vertical AI application, we would love to hear from you! Please reach out to our team at VerticalAI@bvp.com.

Part IV: Ten principles for building strong vertical AI businesses was authored by partners, vice presidents, and investors at Bessemer Venture Partners. Drawing on their deep industry expertise and extensive portfolio insights, the writers provide a forward-looking analysis of how vertical AI startups are reshaping traditional SaaS markets by focusing on industry-specific, language-intensive workflows. Their perspective combines data-driven research with firsthand observations from startups and market trends to outline the emerging opportunities and challenges within the vertical AI landscape.